On Jan. 5, 2021, a user named IlluminatiPirate, a self-proclaimed steward of the “old internet,” made a post on Agora Road (an image-board-style website which claims to be the “Best Kept Secret of the Internet”) titled, “Dead Internet Theory: Most of the Internet is Fake.” In it, Mr. Pirate puts forth the question: What if everything we see on the internet is created by artificial intelligence (AI), designed to influence global culture and keep us trapped in a circle of limited discourse?

In the lengthy post, he makes the following assertions:

- Somewhere around the year 2010, the CIA purchased and began using early versions of large language model (LLM) AI to write fake news stories and sow discord to their own ends.

- From there, companies like Google and Meta began using similar technology specifically designed to learn from and regurgitate human internet culture; this started to have noticeable effects around 2016.

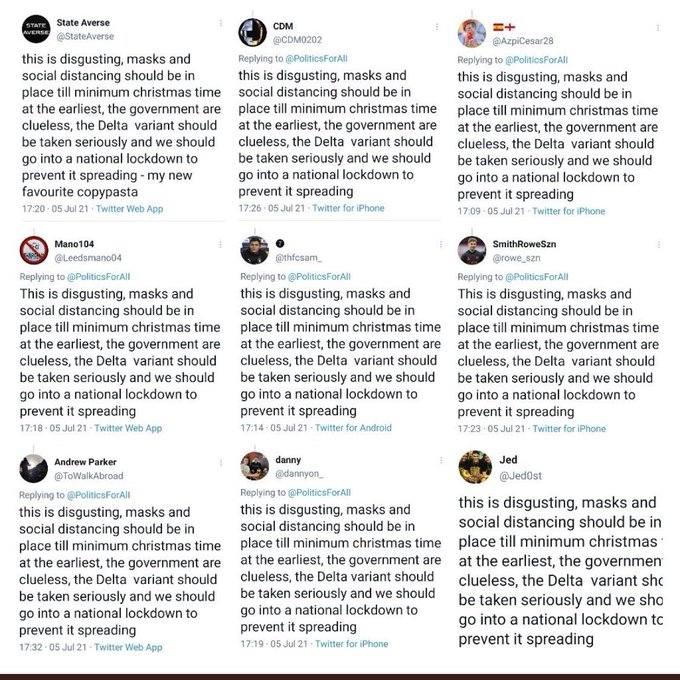

- Since then, the internet has become increasingly overrun by bots, which now make up the majority of the content and traffic visible online.

“There is a large-scale, deliberate effort to manipulate culture and discourse online and in wider culture by utilising (sic) a system of bots and paid employees whose job it is to produce content and respond to content online in order to further the agenda of those they are employed by,” he mentions in his thesis statement.

Global thought control, the CIA up to no good: these premises are dazzling, attractive, and more than likely rooted in historical truths, but they lack evidence observable to the average civilian, which is typical of these kinds of theories, and are therefore easy to dismiss. But amid the conspiratorial speculation and esoteric uses of the f-slur, among other “old internet” jargon, IlluminatiPirate hits on a truth that most internet users would probably agree with: The quality of the internet is getting worse, and it feels less and less human year after year.

Setting the deep-state-engineered new world order aside for just a moment, it is hard to deny that the internet today looks much different than it did even 10 years ago, and it is basically unrecognizable compared to predecessors like ARPANET (the military-funded network built so that research institutions could share computing resources and data). But one does not need to go back so far to start noticing the differences.

The New York Times reported that at one point in 2013, “YouTube had as much traffic from bots masquerading as people as it did from real human visitors, according to the company.” While YouTube had (and still has) a system for detecting bot accounts and fake views, the system was unable to handle the volume of fake traffic it was dealing with. This led YouTube’s engineers to fear a potential event they called “The Inversion.” This is the tipping point at which bot traffic becomes so common on the platform that the verification system begins to see real, human activity as abnormal and starts banning real users instead of the fake ones.

YouTube’s Inversion never came to be. Its teams were able to correct the issue before losing control of the verification system, and the system is now trained to keep bot traffic below 1%, or so the company claims. But a near miss like that raises the question: Could something like “The Inversion” happen to the entire internet? Has it already happened? Users like IlluminatiPirate thought so back in 2021, and since then, the theory has become a more widely accepted explanation for why the internet feels so stale today.

I understand if you’re not willing to take IlluminatiPirate’s word for it. Although he touches on a kernel of truth in his outline of the Dead Internet Theory, his assertion that, “the U.S. government is engaging in an artificial intelligence powered gaslighting of the entire world population,” and his proclamations of love for sites like 4chan may be enough for you to dismiss the entire theory outright. However, we have reached the point where industry titans of the online sphere have begun to acknowledge that the theory (at its core) holds water.

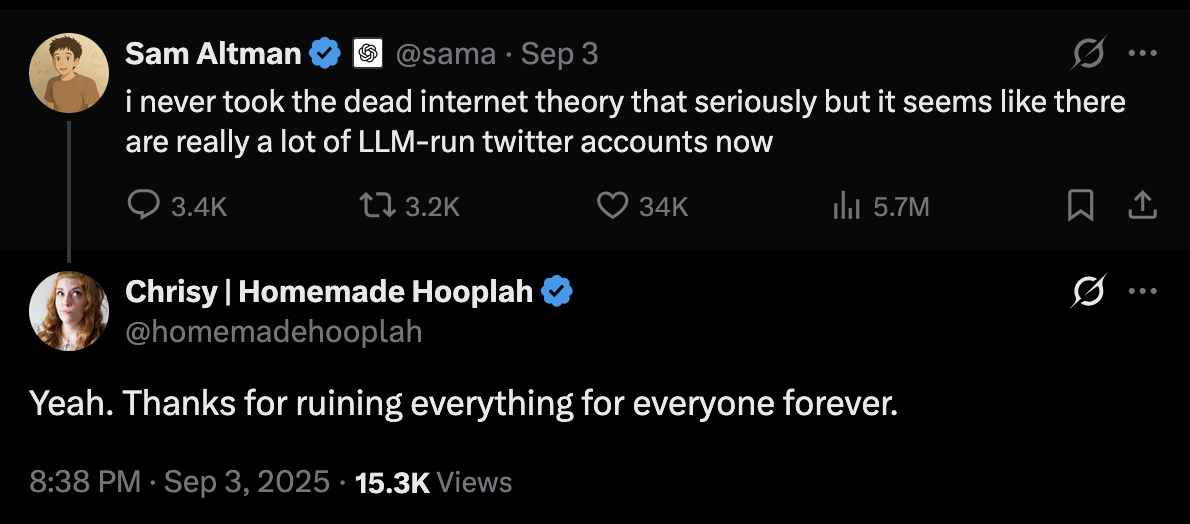

“i (sic) never took the dead internet theory that seriously but it seems like there are really a lot of LLM-run twitter accounts now,” tweeted Sam Altman, CEO of OpenAI (makers of ChatGPT), on Sept. 3 of this year. “Yeah. Thanks for ruining everything for everyone forever,” replied one user.

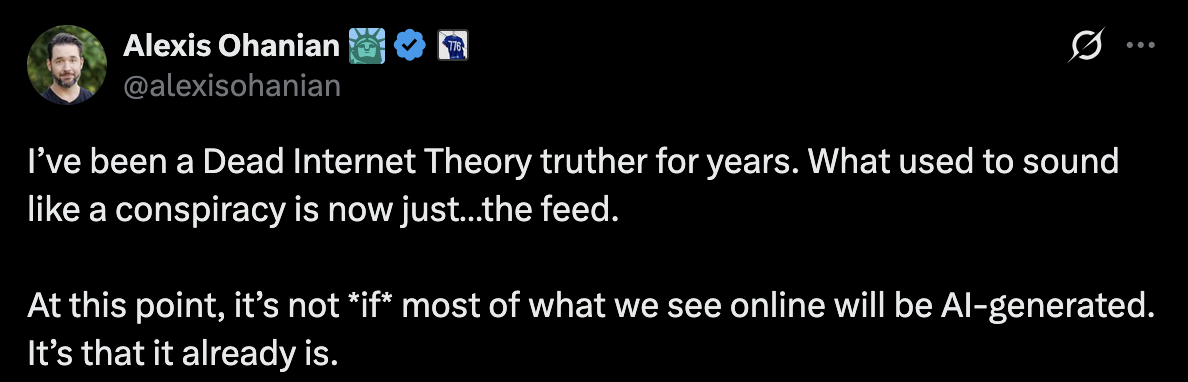

“I’ve been a Dead Internet Theory truther for years. What used to sound like a conspiracy is now just…the feed. At this point, it’s not *if* most of what we see online will be AI-generated. It’s that it already is,” tweeted Alexis Ohanian, co-founder of Reddit, on June 8. Reddit, the self-proclaimed “Heart of the Internet” and social media message board, has over 443 million weekly active users. Earlier this year, a cybersecurity firm called Imperva reported that 51% of all internet traffic in 2024 was generated by bots. I wonder how many of them use Reddit?

It used to be so simple to discern AI slop (as many now call it) from genuine, human-made content. An extra finger here, an em dash there, a little comparison by negation and boom: it wasn’t just easy. It was obvious. And at least when it was obvious, the idea of more AI-generated content felt tolerable. Being able to tell the difference at a glance was a comforting safeguard I could rely on. “Sure, it can make a video of Will Smith scarfing spaghetti,” I would tell myself, “but it looks so surreal! It will never look like real life.” That’s what I was thinking back in 2020, when we all had some collective downtime and the opportunity to take a closer look at what AI was really capable of at the time. And based on that evidence, it was pretty easy (for me at least) to dismiss the idea that one day the internet would be overrun with AI traffic, and that it could happen without us noticing.

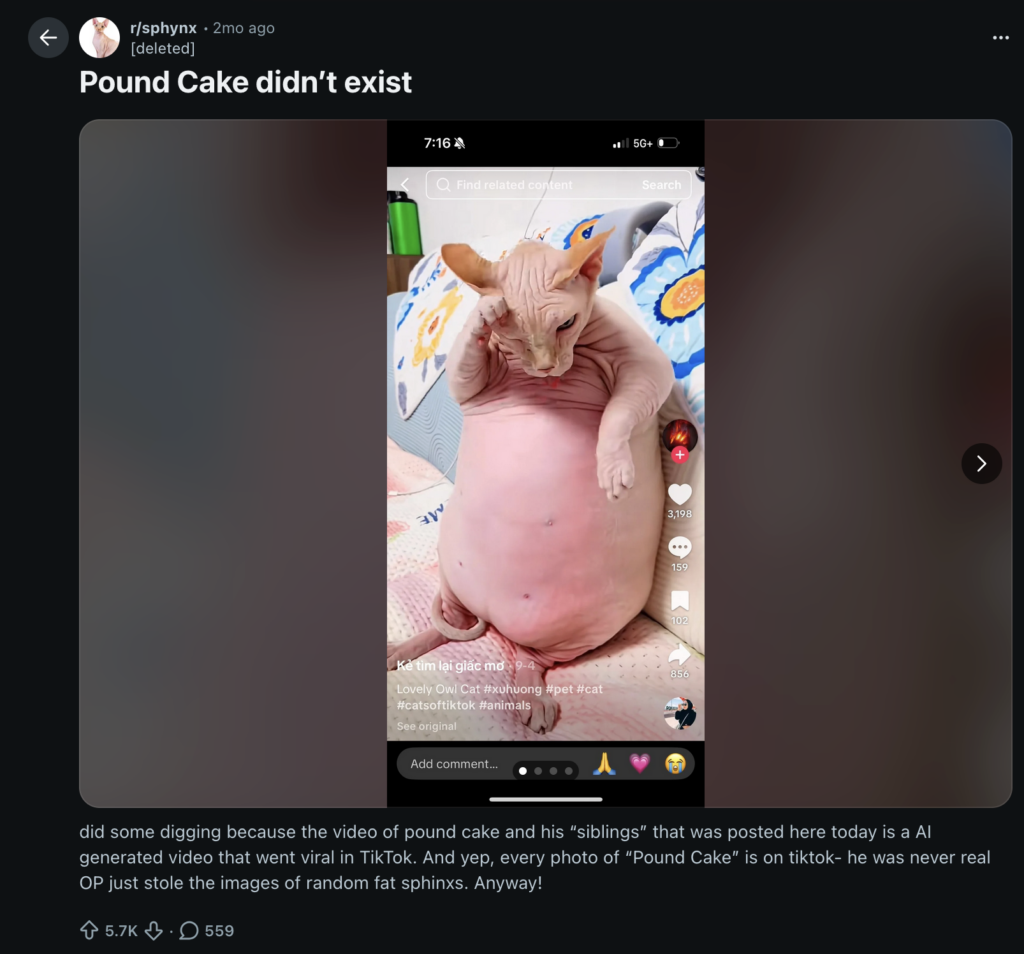

Fast forward to the end of 2025, and my optimism about the human ability to tell the difference has nearly evaporated. We’ve all been there. You shared a photo, a video, a funny story from your favorite subreddit, and you got this response: “That’s AI. Couldn’t you tell?” Absolutely mortifying. Why wasn’t I able to tell the difference right away? I imagine hundreds, if not thousands, of people are asking themselves that question every day now. In fact, thousands of Reddit users were fooled for weeks by a fake cat named Pound Cake before it was revealed to be an AI hoax.

In “The Loop,” Jacob Ward explains that human decision-making can be broken down into two main systems, System 1 and System 2: “System 1 makes your snap judgments for you, without demanding conscious decisions…System 2 makes your careful, creative, rational decisions, which take valuable time and fuel to process.” Makes sense, right? Humans have developed a flow chart for decision-making in order to save time and energy, and the system is designed in such a way that System 2 only steps in when something seems seriously off. So as AI gets more and more advanced, it stops triggering our System 2 reactions. System 1 sees the AI content as real (or real enough to go undetected), and it isn’t until we take a closer look and consciously engage our System 2 thought process that we can see it as fake. So it makes sense that all those innocent Redditors (the human ones, anyway) were fooled into thinking an overweight sphynx cat really was succeeding on his weight-loss journey, because to their unconscious System 1 minds, Pound Cake was indistinguishable from real life.

In light of the accelerating advancement of AI’s generative capabilities, and the growing acceptance that the online world might mostly be robots, I asked some Chabot students for their thoughts on the Dead Internet Theory and how they feel about AI-generated content.

Here’s what mass communications student Amolak Singh had to say:

Gloria Rodriguez, another mass communications student, gives her input:

Chris Minkle, a Chabot theater arts major:

And Sam Valencia, a journalism student at Chabot:

Whether or not the Dead Internet Theory proves to be true, the influx of AI content and bot activity on the internet over the last five years means that “the theory feels true,” as Kaitlyn Tiffany put it in her original Dead Internet story for The Atlantic. And as companies like Disney and Meta continue to pump billions into the AI industry, the internet (and all digital media) is only going to become more saturated with artificial content. What was once a place for people to connect, share independent thought, and explore the world has become a virtual landfill where corporations toss their cheaply made wares, much as they do in real life.

Here lies the Internet, 1991-2025. Gone before its time.

P.S. – One of the quotes above was AI generated. If you’re able to tell which one, congratulations and hold on to that feeling. If you aren’t able to tell — get used to it.